ELON MUSK attracts more controversy than anyone else in the AI realm because of his propensity to approve the release of technology before it’s demonstrably safe and ready for public consumption. While Tesla has been one of his most successful companies to date, it has also attracted the most lawsuits, especially among consumers who felt that Musk overpromised and underdelivered on the concept of self-driving electric cars.

Disappointment over Tesla cars notwithstanding, Musk gained a reputation as a corporate supervillain when he did a Nazi salute at Trump’s inauguration last year and turned loose the DOGE hackers to attack government employment rolls, even eliminating essential jobs. A lot of their efforts have been reversed, but it was costly. DOGE hackers also stole government information about private citizens by copying government databases.

Of late Musk has been under fire for parent company xAI’s chatbot, Grok, enabling the public to “undress” anyone digitally. Over nine days after the capability became available, the chatbot generated and posted 4.4 million images. Of those, 41 percent were sexualized images of women.

Musk’s company is being sued by attorneys general of at least California and Arizona for the creation of child porn using the app. Musk responded by saying he would remove the photo-altering capability. Only AI-generated images will be allowed to be unclothed, he said. Even so, Great Britain is investigating X for creating and spreading artificially generated sexualized images of women and children.

Additionally, the mother of one of Musk's children, Ashley St. Clair, sued xAI in January 2026, alleging Grok AI created nonconsensual, explicit deepfakes of her, causing public nuisance and harm. As we go to press at least 37 state attorneys general are suing to halt the practice, eliminate the capability and remove all such images.

One of the latest shocking developments is that Secretary of Defense Pete Hegseth recently announced that Grok will be integrated into all defense databases. Doesn’t that sound like the plot of a sci-fi movie? Terminator, here we come!

Currently there is no way to stop the administration from pursuing reckless behavior, but the world wants AI regulations, and they will inevitably come. I just hope it happens before some truly catastrophic event occurs because of Musk being unbound by a responsible government.

Claude gains traction

Computer coders have known since last May, when Anthropic released Claude Code, that it’s one of the most powerful AI tools out there. You can ask it to write code for you by typing requests, and it works. It started the trend of “vibecoding,” which doesn’t require users to actually understand how to write code to generate it.

The New York Times published a story on how people who aren’t coders are using it to make their lives easier. One was an Australian man with four daughters who photographed their clothes and fed the images to an AI agent; now he sorts laundry better and faster by showing the agent each item and having it identify which kid wears it. Another used it to generate website code in a day. An assistant prosecuting attorney used Claude Code and Cursor (another vibecoding app) to create a mobile app called AlertAssist that lets users send mass texts to contacts in an emergency. The owner of a welding and metal-fabrication business used Claude Code to build a personal AI assistant. The possibilities for the average person are endless.

Humans& to train workers

A startup called Humans&, composed of a top researcher from Anthropic, two from xAI and one of the first employees of Google, is working to have AI empower people rather than replace them. To do that, the group is building software that makes AI easier to use and tailored to specific purposes, such as improving online searches. It is hiring twenty researchers and engineers to create this more human-friendly AI agent. The company is valued at $4.48 billion and doesn’t even have a product yet. Amazon founder Jeff Bezos, Google Ventures and SV Angel have invested $480 million in seed money.

THE Merriam-Webster Dictionary named “slop” its word of the year for 2025, referencing AI-generated images and videos meant to drive traffic on social media and to websites. While that’s one of the less harmful results of access to AI, people are worried that the technology’s predominance will lead to the loss of human jobs, while also potentially harming their physical comfort, their health and their cost of living. Arizona has become a new epicenter for the issue. As a result an AI backlash is well underway in the state.

Awareness is growing regarding the 160 proposed data centers in Arizona. People in general don’t entirely trust AI for research and writing, and are unhappy about being fooled by AI-generated text and videos that are not labeled as such. Another recent development, the potential tyranny of algorithmic pricing, has also elicited concerns from Arizonans.

Data centers sprang up across the country with no discussion of how placing them next to residential neighborhoods could negatively impact the people living there. It has since become well known that data centers make poor neighbors. They’re noisy, they pollute, they use a lot of water, and they will very likely raise the cost of electricity substantially for everyone because of their high demand. The data center for Elon Musk’s xAI, located in a Black neighborhood in Memphis, Tennessee, has drawn intense criticism for those reasons. The area was already polluted by 17 other industrial plants, but according to residents interviewed by Time magazine, Musk’s plant emits the scent of “rotten eggs.”

Survey data from Datacentermap.com show that of 160 data centers planned for Arizona, 154 are sited in Maricopa County. That’s partly due to tax incentives, available land, lower costs for electricity and water compared with other states, and weaker zoning restrictions. Some residents are already firmly opposed.

Turning down data centers

A turnout of at least 300 people at a Chandler City Council meeting led to a ‘no’ vote on a data center championed by former US Senator Kyrsten Sinema. A story in The Arizona Republic quotes residents who opposed it citing potential future job losses to AI, noise, and potential increases in water and electricity rates. When the center was voted down on December 11 at 10:30pm, those against it chanted, “Power to the people!”

Prior to that, The Arizona Republic reported, a proposed data center for Project Blue in Tucson drew more than a thousand people to a public meeting at the convention center. Some held signs referencing water-use concerns, such as, “Not One Drop for Data.” Many shouted and were angry about the $3.6-billion project that would support Amazon Web Services.

The center would be located on 290 acres of unincorporated land in Pima County, south of the city. If approved, the project would use more energy annually than every home in Tucson combined, as well as 1,910 acre-feet of water annually, about 6% of the city’s supply. The proposal includes annexing the land into the city before the project moves forward.

Data-center regulations in Phoenix

Phoenix Mayor Kate Gallego said at a recent event that her city has passed an ordinance to require companies that want to build data centers to adhere to minimal regulations.

“One of my top priorities right now is commonsense policy around data centers,” Gallego said. “The US economy is basically flat right now except for AI, and there is a huge amount of money going into data centers. You’re seeing companies like Amazon laying off human beings and putting all the money into data centers and servers. Northern Virginia has the most, but we are one of the communities at the highest level of data centers. I am increasingly concerned about that, and that’s something I’ve done a lot of work on. The mayor of Melbourne, Australia and I co-founded a global mayor’s organization to try to make sure AI (companies) and these data centers are more responsible.”

“Excessively generous tax credits” are part of why data centers look to locate in Arizona, Gallego said. The tax credits reduce property, sales and income taxes for the companies operating them.

“So it hits us,” Gallego said. “Our schools need property tax. We’re giving that money away. These are the wealthiest companies in the world. We have Middle Eastern sovereign wealth funds. We have companies, household names like Meta and Google, so they can afford to pay taxes in Arizona. Many of them use fossil fuels for their power, so the water use isn’t necessarily at the highest at the data center, but they use so much electricity, and that takes a ton of water that I feel should go to job creators. Data centers are wonderful construction projects, but they’re not good for long-term jobs. They just are farms of servers that use a ton of electricity. So I would like us to be very, very intentional about that. I would like us to roll back (projects) and make them pay their fair share of taxes.”

Recently the City of Phoenix unanimously passed an ordinance to put location constraints on data centers.

“They are going in anywhere there’s electricity, and so it’s been right up against housing, and the noise is frustrating to many people, particularly people who are noise-sensitive. They devalue your property if you have a giant substation next to you and you’re a homeowner. So we’ve had retirees call and say ‘I put all my wealth in my house, and now there’s a giant substation. Will the City of Phoenix pay me for my lost value?’ The idea that international private equity funds are not paying these folks what they want, and they think it has to come from us, has also been a frustration. So we’re trying to require reasonable landscaping and some distance from homes so people can sleep.”

Gallego said the Republican candidate for attorney general wants to prevent any regulations on data centers, so that they can locate anywhere they want. She disagrees and says she’d even like to see state regulations. If the Republican-controlled Legislature would consider passing them, Governor Katie Hobbs has already said she would sign a bill.

“They (data centers) are way larger than they used to be,” Gallego said. “They’re now big industrial sites, many of them have their own onsite power, and so they ought to go in industrial areas, not next to people’s homes.”

Utility rates are already climbing. The New Yorker reports, “American utilities sought almost thirty billion dollars in retail rate increases in the first half of 2025.” Bloomberg produced a major report on utility rates and found that “wholesale electricity costs as much as 267% more than it did five years ago in areas near data centers.”

Instacart’s algorithm-pricing experiment

Recently Consumer Reports, More Perfect Union and Groundwork Collaborative conducted a study on Instacart charges and found that the company that provides shopping services in most grocery stores was charging different prices in an “experiment” primarily conducted in four cities in the DC area. The same dozen eggs, for instance, was sold for five different prices.

Consumer Reports surveyed 2,240 adults in September, and 72% who used Instacart in the previous year didn’t want the company to charge different users different prices for any reason. They view the idea as manipulative and unfair. US Senator Ruben Gallego introduced a bill called the One Fair Price Act to prevent companies like Instacart from using “surveillance” pricing. As we go to press we’re hearing that Instacart has stopped the experiment in response to the publicity.

General mayhem

The social-media site X has used bots from around the world for political rage-baiting about US politics, something that calls into question what’s real opinion and what’s not. Movies, advertising and other media have used AI programs to generate content, sometimes with embarrassing results, such as an entertainment story that suggested books for summer reading that don’t exist. Political deepfake ads are also problematic and aggravate public distrust of media in general.

Until the law requires labeling of AI content, users are left to contend with ineffective state laws, platform policies and ethical guidelines that companies may not adhere to. The current administration has taken a hands-off approach to AI and has attempted to prevent states from passing any laws to restrict it.

US Senator Bernie Sanders recently announced that he plans to introduce a bill to regulate data centers, but unless Republicans go against the Trump edict to prevent AI regulation, the bill is doomed to fail.

In the meantime users must go to fact-checking sites and consult legitimate, established media outlets for reliable information, and are advised to be skeptical if something on the internet seems too strange to be real.

The headlines about AI-related layoffs would make you think that it’s wiping out jobs at a rapid pace. The truth is alarming in a different way: it shows how weak the US economy has become.

Only about 15% of the employees laid off by several high-tech companies had their jobs filled by AI agents or products. The rest were let go in cost-cutting measures. Some companies claimed they hired too many people during the pandemic, and now they need to scale down. Regardless, it shows that the economy has slowed this year.

Amazon plans to replace human workers with robots, eventually, but unless the technology far surpasses what has come before, that will prove difficult to execute. The company says it will shed 600,000 jobs when robots take over. In a story in PC World, Viviane Osswald writes, “Amazon plans to automate around 75 percent of all activities by 2033, which will save the company up to $12.6 billion (and projections indicate this will reduce the cost to sell each product by about 30 cents).” Right now Amazon is hiring 250,000 seasonal workers for its warehouses and delivery services. That’s a long way from having AI replace human talent.

But Amazon has zeroed in on reducing management. It let go of 14,000 middle managers, 4% of the company’s 350,000 employees and a reasonable move in the scheme of things. That’s where we might see far more reductions as AI makes operations more efficient. When you can produce a report in five minutes instead of a week, fewer people are needed to keep the wheels turning.

Recently I came across an Oxford University study that analyzed the 180,000 global tech layoffs this year. It found that most were not from AI. In fact, Fabian Stephany, assistant professor of AI and work at the Oxford Internet Institute, concluded that AI was being used as a “scapegoat” for old-fashioned cost-cutting because blaming AI was a better story for shareholders than reduced demand.

Even when companies laid off people in a bid to replace them with AI, they have sometimes had to rehire them when the AI systems that were expected to take over did a worse job. Consumers who were dissatisfied with their interactions flooded these companies with complaints.

Three tech companies originally claimed that AI caused their layoffs. Salesforce let go of 4,000 customer-support people but then moved them into other roles. Klarna claimed to have cut 40% of its workforce, but the reduction was actually “natural attrition” after Klarna stopped hiring in 2023. Both Klarna and IBM attempted to replace humans with AI, but had to rehire them when the AI failed to handle complex customer interactions.

Nvidia proves again there’s no AI bubble — yet

When Nvidia released its third-quarter report many analysts thought it would show a loss. Instead its numbers were stronger than ever, with 62% year-on-year growth in demand, raking in $57 billion, well ahead of market expectations.

Its built-in demand from the other largest tech companies will keep this trend going for the foreseeable future. However, expectations of a crash are taking a toll on the most valuable global company.

“If we delivered a bad quarter, it’s evidence there’s an AI bubble,” said CEO Jensen Huang to employees, as quoted in Fortune magazine. “If we delivered a great quarter, we’re fueling the AI bubble. If we were off by just a hair, if it looked even a little bit creaky, the whole world would’ve fallen apart.”

Somehow the company is keeping it together in a topsy-turvy Wall-Street world that’s making hedge-fund managers retire and small investors worry.

People don’t understand how the AI economy works — it’s not like any other economy in history. High demand will continue for years to come.

Crispr gene-editing leads to fetal engineering

The cover of the November/December issue of MIT Technology Review features the ominous development of gene-editing firms gone rogue. This is no exaggeration. The main story is headlined, “Can You Curate A Perfect Baby?” Some of the tech billionaires behind funding these companies include Elon Musk, Peter Thiel and Coinbase CEO Brian Armstrong.

Want a baby with high intelligence and great athletic ability? One of the companies in Próspera, Honduras, a city founded for gene exploration without government intervention or regulation, hopes to make it happen. Genetic companies can already screen for a range of diseases, but now they believe they will be able to shape human destinies down to the heritability of empathy, impulse control, violence, passivity, religiosity and political leanings. Even eye color.

One need only read about Nazi eugenics experiments or even have seen the film Gattaca to understand how dangerous this path could be for humankind. Still, that won’t stop people who ignore the ethics of science in favor of making a buck or enacting some nefarious genetic-engineering plan. When you see Musk doing a Nazi salute and Thiel saying he’s not sure that the human race should survive, it’s not far-fetched to infer that they don’t have the best of intentions.

Baby-boomers have been around long enough to see the fruits of the personal-computer revolution that took about twenty years to fully deliver after overcoming software flaws and limitations.

Then we experienced the life-altering benefits of the internet, which gave us instant access to information, connection with other people and work simplification. As an early adopter I had a dual-floppy-disk Kaypro and an AOL connection that allowed me to work remotely as a freelance journalist decades before it was commonplace. I ordered from Amazon when it was basically an online bookstore. Back then, my parents didn’t understand how their Christmas presents came from the internet.

Now we are in the AI era, and its promise is staggering. AI technology is already delivering returns far faster than any technology that has preceded it. By next year it will be apparent to everyone that it could impact every aspect of our lives, even more than the supercomputer we hold in our hands (any smartphone).

I can put this into perspective better than most people because I see firsthand how my husband’s AI company, ZON Energy, has had tremendous breakthroughs after years of effort. As the machines become smarter, their abilities become breathtaking and they deliver new, better results at a faster pace. The major AI companies are betting that 2026 will be the year superintelligence becomes undeniable, radically changing the way we solve problems.

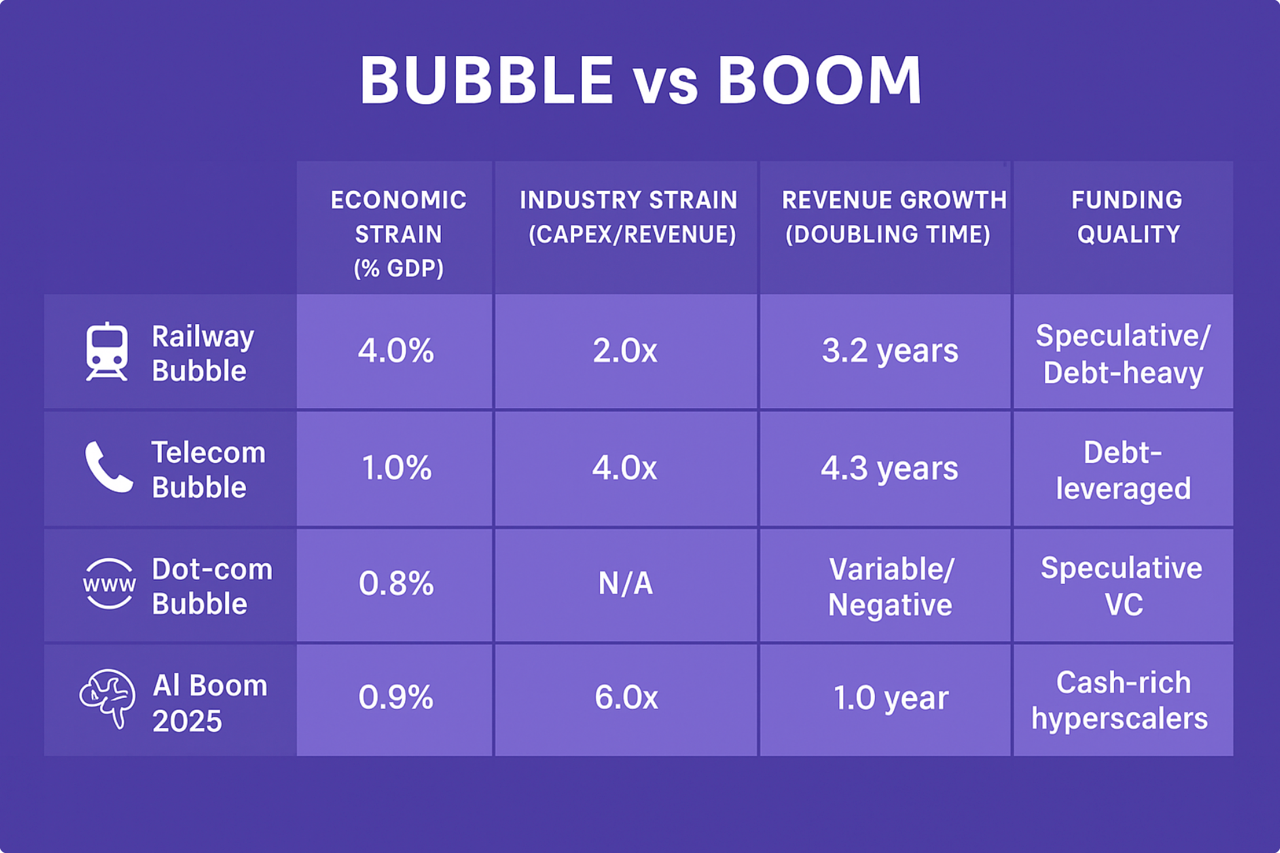

While people in the field understand the possibilities and real advances, outside observers continue to wring their hands and worry about a crash, like the bursting of the internet bubble in the late ’90s. Having been a co-founder of an internet company during that time, I can confidently say there’s no comparison.

In any new field, weaker players will fall by the wayside for a lack of uniqueness, inability to reach goals, or management that takes a wrong turn. But unlike the irrational exuberance that drove the internet bubble, returns on AI investments will pay out far sooner. While some large companies will take longer to make their returns on investment, their potential cannot be overstated. If anything, AI executives are underselling it.

How do I know this? Researchers have been crunching the numbers, and they’re showing that AI is achieving stacked exponential growth. That’s not hyperbole. The growth already exhibited is based on factors that make it difficult to compare with any other type of economic growth in history. For instance, AI is improving the performance of hardware at 3.4% per month. Algorithmic performance is doubling every six months. The quality and quantity of data are doubling every eight months. Useful applications and uses are doubling every twelve months.
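To put those rates on a common footing, here is a minimal arithmetic sketch in Python that converts each figure quoted above into a yearly growth factor. The function names are illustrative, and multiplying the four factors together is only one possible reading of “stacked” growth, an assumption made here for illustration rather than a method taken from the research.

```python
# Convert the growth figures quoted above into yearly multipliers.
# Function names are hypothetical; only the rates come from the column.

def annual_factor_from_monthly_rate(monthly_rate: float) -> float:
    """Yearly multiplier for a quantity that compounds once a month."""
    return (1.0 + monthly_rate) ** 12


def annual_factor_from_doubling(months_to_double: float) -> float:
    """Yearly multiplier for a quantity that doubles every N months."""
    return 2.0 ** (12.0 / months_to_double)


factors = {
    "hardware (3.4% per month)": annual_factor_from_monthly_rate(0.034),
    "algorithms (doubling every 6 months)": annual_factor_from_doubling(6),
    "data (doubling every 8 months)": annual_factor_from_doubling(8),
    "applications (doubling every 12 months)": annual_factor_from_doubling(12),
}

# ASSUMPTION: treat the four factors as independent and multiply them.
# This is one reading of "stacked" growth, not a claim from the research.
stacked = 1.0
for factor in factors.values():
    stacked *= factor

for name, factor in factors.items():
    print(f"{name}: about {factor:.2f}x per year")
print(f"combined, if the factors simply multiply: about {stacked:.1f}x per year")
```

On these figures, hardware improves by roughly half again each year, algorithms quadruple, data nearly triples and applications double.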

These areas of growth will translate into real dollars.

On top of that, AI tools are accelerating AI research; AI systems are optimizing their own architectures and training; AI is creating training data for the next-generation models; and AI is optimizing chip architecture for AI workloads (these are research results from a ZON Energy white paper). That all means faster development and turnaround on products and projects.

At least one report concludes from analysis of those growth factors that AI’s growth is not a bubble but rather an economic boom. See exponentialview.co/p/is-ai-a-bubble.

The good news

I love to learn about the good that AI is doing, especially when it comes to medical advances. One of the latest I discovered is a retina-implant chip that enables people who are vision-impaired to see close-up images. MIT Technology Review reported the development by Science Corporation in its recent newsletter. The “artificial-vision” chip allows users to text and do crossword puzzles, for instance.

The bad news

Both xAI and OpenAI are making pornography-related products using AI-generated “sexy” chatbots and graphics. While porn has traditionally driven much of technology development (the various types of video and streaming tech, for instance), it’s disappointing that these companies are pursuing the lowest common denominator. Already young people are using the xAI chatbots, and it’s not going well. I predict the companies will make a lot of money in custom porn, but considering the tech-bro history, I also predict that they’ll somehow cross lines and get in trouble.

Additional bad news is that Wikipedia’s traffic is sinking — down 8%, according to Pew Research — because users prefer to use Google’s AI summary over going to the website. Wikipedia is a curated website that does fact-checking on its postings. A chatbot or AI summary is rarely entirely reliable. Please support real websites over AI chatbots. They’re still the best sources of information, because humans are in the loop.

Not all AI models or large-language models are created equal, literally. Some were trained haphazardly by scraping anything and everything from the internet (ChatGPT); others were built more carefully using reliable and vetted materials and websites, and it shows. Moreover, how they respond to queries differs as well.

Those using these tools who are not programming professionals are often frustrated by their inability to find correct answers because they treat LLMs as if they’re search engines. They don’t work that way.

Queries must be made in great detail to get appropriate responses. The LLM must be told to use industry-vetted or academic, trustworthy sources, and cross-check them. Anything else can elicit random information worse than what we get from a Google search, because good Google results tend to rise to the top thanks to the algorithms.
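As a rough illustration of what a detailed query can look like, the sketch below assembles one in Python. The function name, its parameters and the sample question are all hypothetical, invented here for illustration; it only builds the text of a request and does not call any particular AI service.

```python
# Hypothetical helper: builds a detailed query for an LLM.
# Nothing here calls a real AI service; it only assembles text.

def build_query(question: str, context: str, source_types: list[str]) -> str:
    """Return a prompt that names acceptable sources and asks for cross-checking."""
    sources = ", ".join(source_types)
    parts = [
        f"Question: {question}",
        f"Background: {context}",
        f"Use only these kinds of sources: {sources}.",
        "Cross-check each claim against at least two of those sources.",
        "List the sources you relied on, and say so plainly if you cannot find an answer.",
    ]
    return "\n".join(parts)


# Sample values are made up for the example.
query = build_query(
    question="How much water does a large data center use per year?",
    context="I am a resident preparing comments for a city council meeting.",
    source_types=["peer-reviewed studies", "government reports", "industry-vetted publications"],
)
print(query)
```

The point is the level of detail: the request spells out who is asking, which kinds of sources count and that claims should be cross-checked, where a search-engine habit would type only the question.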

Someone recently complained to me that Google’s AI Gemini gave them bad information and even had hallucinations. That’s quite possible because Google has one of the poorest free LLM systems. However, because amateurs like the “easiest” tools to use, a marketing study in August by Polymarket found that it’s still the most popular. Meanwhile, Gemini 2.5 Pro actually is one of the better models for professionals who pay for access.

Whether or not you’re paying to use an LLM can impact the quality of what you’re receiving. Recently OpenAI upgraded to ChatGPT 5, eliciting a backlash by amateur users that has dwarfed any other reaction I’ve seen. People hate it. They say they lost their friendly access to a familiar version that retained a memory of past interactions. Nonprofessionals say it’s slower, less creative, and lacks “personality.” It’s interesting that people want a tool to be something it wasn’t intended to be, but there you are. OpenAI had initially removed access to earlier models on its release, but paying customers still have access to the legacy models.

You actually get what you pay for with ChatGPT, because the company is focused on making money and throttles down access to freeloaders. Nonpaying users are likely to only get access to ChatGPT 4.1 mini or nano versions. This was bound to happen, because the costs of offering it for free are ludicrous.

For professionals, ChatGPT 5 is a tremendously better model, providing access to PhD-level research far faster than ever. An independent test of the various models in late August by Duke University rated it higher than any other LLM in completing a multitude of tasks. Claude gained second place and Gemini 2.5 Pro was third. If you want to read the highly technical abstract on the study for yourself, it’s called “LiveMCP-101: Stress Testing and Diagnosing MCP-Enabled Agents on Challenging Queries.”

One of my social-media friends recently asked how they could decide what LLM tool to use for work. I suggested trying them all out to decide, but someone else gave a useful response that I’m sharing here. Credit goes to social media friend Lauren Mitchell.

“Think of the three big AIs like friends:

“ChatGPT = your bestie. She’s down for anything, helps with planning, brainstorming, feelings, business — total jack-of-all-trades.

“Claude = your quiet friend who writes Taylor Swift–level lyrics and secretly crushes code. She takes your messy rambling and instantly turns it into clean, organized notes, summaries, or even big-picture insights. Total powerhouse.

“Perplexity = the nerdy guy in the corner. He doesn’t talk unless you ask him, but when you do, he gives you straight facts, no fluff, no feelings. 100% reliable on data.

“That’s why they’re all useful for different things.”

Notice she didn’t mention Grok, the chatbot made by xAI, which is one of the more popular LLMs. That’s because, unless you’re a fanboy of Elon Musk, you know that he’s manipulated its responses so that you can’t consider it reliable.

Anthropic, the company that created Claude, offers it in several versions: Sonnet is the everyday model, and Opus is the larger one that can do the heavy lifting in terms of “thinking” through a problem. Professionals tend to use Claude, which was trained in an ethical, reliable manner. It has a longer context window, enabling more in-depth research, and it generates computer code efficiently.

Meta’s Llama is not popular at all because it’s error-prone and fails in competing with the others in completing tasks.

Everyone has a different use for AI, but unless you’re using a tool customized for your purpose, you’re unlikely to do any better than ChatGPT if you’re taking advantage of free access.

Incredible AI Breakthroughs

From speeding up patient discharges in the UK to acting as a second, reliable source for breast-cancer screening to identifying deepfake videos with 98% accuracy, AI advances are announced almost daily. Those that are most immediately impactful tend to involve health care, which is in a pitiable state in this country, both for lack of access and impediments to the development of new medications and treatments.

I recently listened to a Radiolab podcast called “The Medical Matchmaking Machine,” in which a medical doctor, David Fajgenbaum, saved his own life by doing the research on his rare condition and finding an existing medication to treat it. Talk about “Physician, heal thyself!” He also saved his uncle’s life by finding another existing medication that could treat his angiosarcoma. He’s now working with an AI company using machine learning to develop a database of connections between existing drugs and conditions, making them potential treatments for an array of rare conditions, as well as diseases considered fatal or that have no cure.

Fajgenbaum is doing what the medical community has failed to do because of the complexities and politics of developing medication — he’s saving people, using AI. And he’s going to make it possible for people to save their own lives by making his system and algorithm available for other researchers and doctors to use. It’s an incredible story and it shows what the real value of AI technology can be if used for the good of humanity.

The Microsoft “helper” named Clippy represents one of the most misbegotten developments in the tech world, on a par with Mark Zuckerberg’s failed cartoonish Meta world and Musk’s destruction of Twitter into the hellscape of X.

AI has reached its Clippy moment.

For those not old enough to remember, Microsoft introduced the paperclip animation as part of Microsoft Office in 1997 to offer suggestions to users. Everyone hated it. Eventually Microsoft killed off Clippy because of its unsolicited and sometimes inaccurate advice, and the fact that it would pop up like a serial killer when least expected.

When memes became a thing, Clippy was among the early ones, offering sarcastic observations of life and fictional deranged suggestions, such as “It looks like you’re writing a suicide note. Do you need help?” Those memes seem even more relevant today as people start conversations with ChatGPT and other AIs and lead them to hallucinations or induce them to provide bad advice. It’s like Clippy gone wild.

Multiply that hatred of Clippy by three and you get an idea of how much I hate intrusive AI that pops up, hounding me with suggestions and practically begging me to put it to use. I know that these companies rely on users to help train their AIs, but I’m not interested in being an AI tutor.

Take, for instance, Microsoft Word. I don’t want Microsoft’s Copilot AI to interrupt my writing. It annoys me when it makes suggestions. It’s like having a scold standing behind me while I’m trying to express myself. It ruins my train of thought.

Doing a basic search online can be an exercise in weirdness sometimes when Meta’s AI tries to anticipate what I want to type in a social-media account. It will quickly substitute the wrong word while I’m in the middle of typing. My frustration with this would probably be alleviated if I dictated, but I’m old-school and still prefer to use a keyboard to express myself.

Recently my Microsoft email program began posting ‘priorities’ that Copilot found in my email, placing them at the top. The problem with that was that I get a lot of spam or emails that I occasionally pay attention to from various groups. But Copilot thinks all email is equally important, and places it front and center if it contains a date and time for something. The animal group I occasionally read emails from or the environmental group that I donate to once a year are treated as equal to actual events on my calendar. Worse, Copilot’s choice of the top five priorities doesn’t go away after they’re past their dates when there’s nothing else to take their place. You can’t delete them.

Fortunately I’ve discovered that I can turn off the AI in my programs, and so I promptly shut down ‘priorities,’ despite the potential usefulness. I don’t need an electronic nag note every time I open my email. Copilot has also been turned off. They don’t make it easy, but Google usually obliges when I search how to do something involving turning off AI. I think there are so many frustrated people out there that they’ve widely shared their findings on how to do that.

Most people don’t consider the privacy they give up by allowing AI into their laptop or phone. You might think it’s all between you and the device, but AI can track what is being said and written, and by whom, and it can affect your privacy by serving you information you haven’t requested (as in Google’s search) or advertising you haven’t requested (as in Meta’s Facebook or Instagram).

Apple has integrated AI into its iPhones with, most recently, phone recording capability and transcription. That can be useful, but it’s not the ideal tool if you’re willing to pay for an upgraded service.

My husband and I recently bought the Plaud Note, a device the size of a credit card that magnetically attaches to your phone and can record phone calls and conversations in the room at the press of a button. Up to 30 hours of recordings can be made without recharging, and the device has unlimited storage and an AI transcription plan using Claude 3.5, developed by Anthropic, one of the most reputable AI companies.

For someone who is on conference calls with multiple people several times a day, the device is incredibly useful because it can transcribe accurately in real time and easily distinguish voices. In the past, I’ve had to upload conversations to Otter.ai for transcription, which could take anywhere from 15 minutes to an hour, depending on the length of the conversation. Otter.ai also has a 90-minute time limit. Plaud can transcribe up to 24 hours of recordings in one day.

So some AI is well worth having around, especially if it solves problems, instead of hanging around and creating them.

New AI widgets to improve technology around editing music and film are being released at such a rapid pace that it’s hard to track exactly what’s important and what’s just the latest tweak. But a few established tools recently made a quantum leap, of sorts.

For instance, you can talk directly to ChatGPT and get it to “write” a song with decent lyrics and music using only a few prompts. Sure, it’s derivative of many other songs, but the algorithms are designed to produce the most sought-after beats and sound. This YouTube video (youtube.com/shorts/z9vBJdhOiOk) gives an idea of how simple it is. Considering how mediocre most popular music sounds to me, it could be a hit!

In video production, Veo2 can generate entire films with very little direction from creators. This YouTube video (youtube.com/watch?v=n6sIJNBg52A) illustrates just how real it looks. Already a YouTube channel that I enjoy called The Why Files uses AI-generated video extensively to illustrate its stories, and some are incredibly well done. This particular WF link leads to a story on the potential of AI and quantum computing.

Ironically, a recent Nielsen survey of 6,000 people on AI in media found that 55% of audiences aren’t comfortable with it, according to a story in Forbes magazine. The article summarizes, “… without transparency, audiences are losing trust in brands, and multicultural audiences — who demand cultural authenticity — may disengage entirely.”

Native American audiences are particularly distrustful, with 56% distrusting brands that heavily use AI. Further, 55% of Black respondents were concerned about discrimination and bias in AI, for good reason. Early image programs routinely gave discriminatory descriptions of Black people.

Four out of five respondents wanted media companies to inform them if AI is being used in an article, image or video.

While users like the tools for personal use, they reject the use of AI in media because of errors and hallucinations, as shown in the next news item.

AI news-editing programs still have a long way to go to simulate the skills of human editors, as demonstrated by the latest disaster for a news-providing entity, in this case Apple, according to a story on cnn.com.

The headlines were made-up interpretations of news stories that Apple Intelligence fed to users who opted into the beta AI service. They included a summary of a BBC story falsely stating that Luigi Mangione, who was charged with murdering UnitedHealthcare CEO Brian Thompson, had shot himself. Three New York Times articles, summarized in a single push notification, falsely stated that Israeli PM Benjamin Netanyahu had been arrested.

Additional flubs were the last straw: a summary of a Washington Post notification falsely said that Pete Hegseth had been fired, Trump tariffs had influenced inflation, and Pam Bondi and Marco Rubio had been confirmed to the Cabinet. Apple pulled the AI for headlines after that.

The “intelligence” news service is the latest AI deployed without the benefit of extensive training. In essence, it’s built on ChatGPT and customized for Apple. Apple’s version wasn’t sophisticated enough to suppress hallucinations, so it’s back to the drawing board.

TechnologyReview.com, MIT’s AI magazine, usually captures the most interesting trends in its specialty, and this year’s top five predictions are not what you might expect. Here’s what’s coming:

1 Generative virtual playgrounds are in the works at no fewer than four companies, three of which will use them for on-the-fly video-game environments in the most interactive games imaginable. The fourth, World Labs, co-founded by Fei-Fei Li (the mother of AI imaging), is working to give machines the ability to interpret and interact with the world, potentially making robots far more able to operate independently.

2 Large language models that “reason,” with integrated agents that remember recent interactions with users, are becoming far more practical for human interaction. (Siri doesn’t get most of what you ask it, but imagine a tool that does.) Both OpenAI and Google are training agents on a slew of tasks.

3 AI scientific discoveries will accelerate. Last year AI protein-folding advancements won three scientists the Nobel Prize in Chemistry. MIT expects many more innovations to be recognized.

4 AI companies will work more closely with the US military. Not only will AI companies help advance defense technologies, but the Defense Department will provide classified military data to train models.

5 Nvidia chips will have competition. It’s been a long time coming, but several other companies are vying for the AI chip market, including Amazon, Broadcom, AMD and Groq (the chip startup, not xAI’s Grok chatbot), with additional innovations in their designs.

While these are mostly intriguing at this point, they will lead to more breakthroughs and practical developments that we probably can’t yet imagine. The primary concern is the use of AI by the military, but I’m going to try to be optimistic. If it’s like the space program, people could ultimately benefit more from the resulting knowledge.